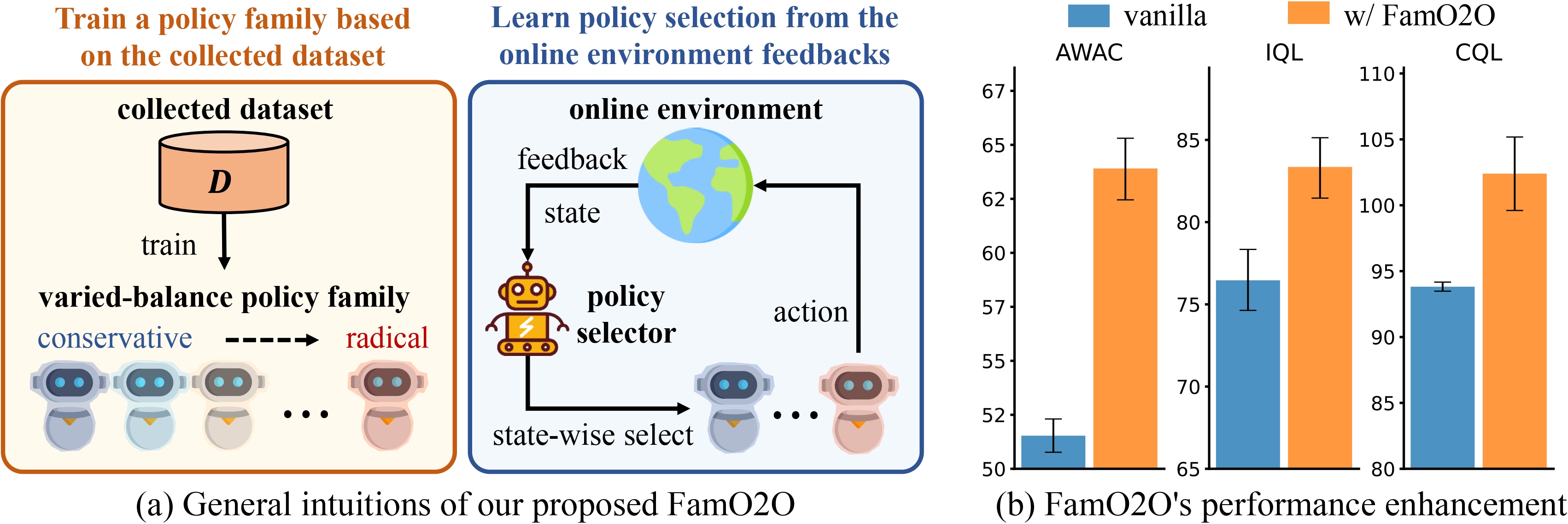

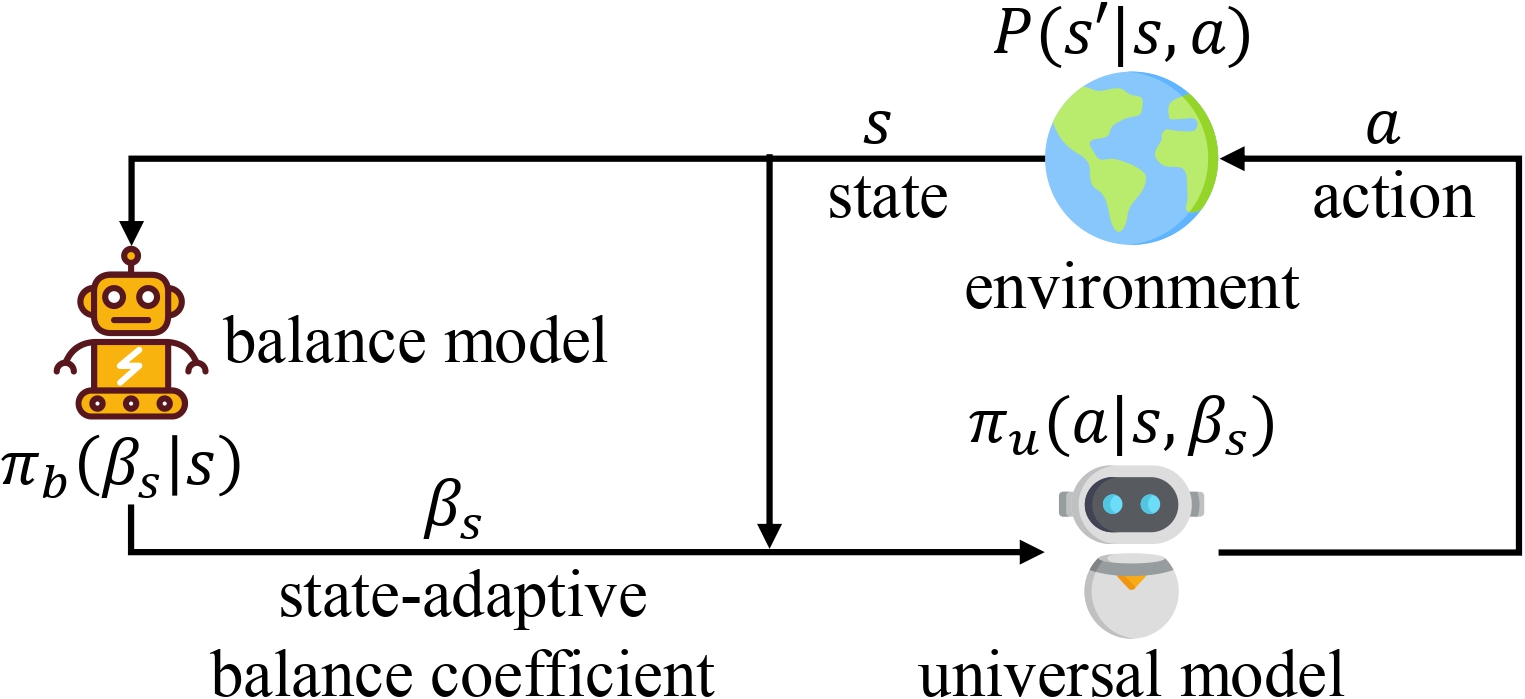

We propose FamO2O, a simple yet effective framework that empowers existing offline-to-online RL algorithms to determine state-adaptive improvement-constraint balances. FamO2O utilizes a universal model to train a family of policies with different improvement/constraint intensities, and a balance model to select a suitable policy for each state.

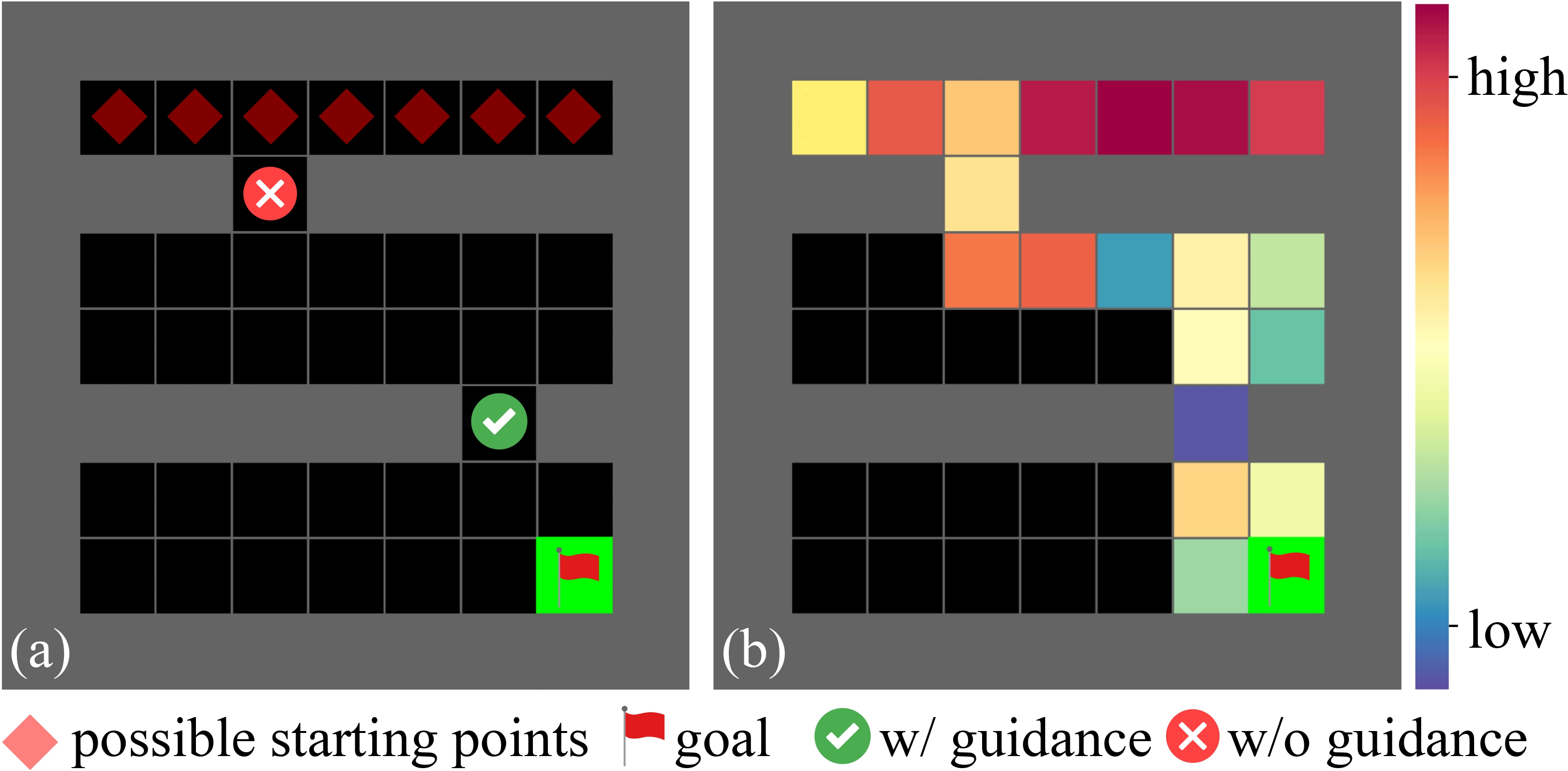

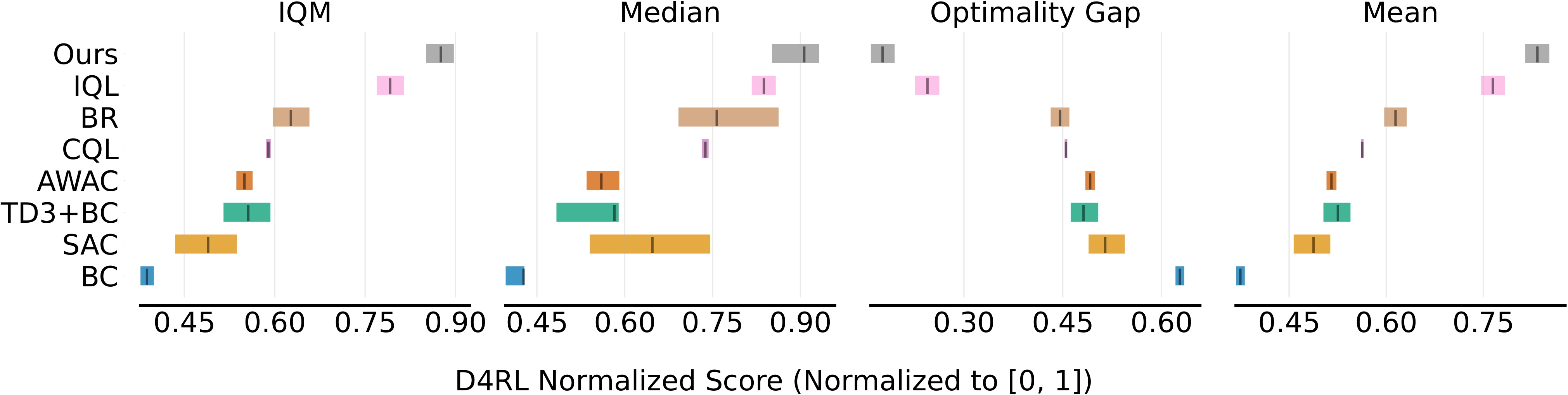

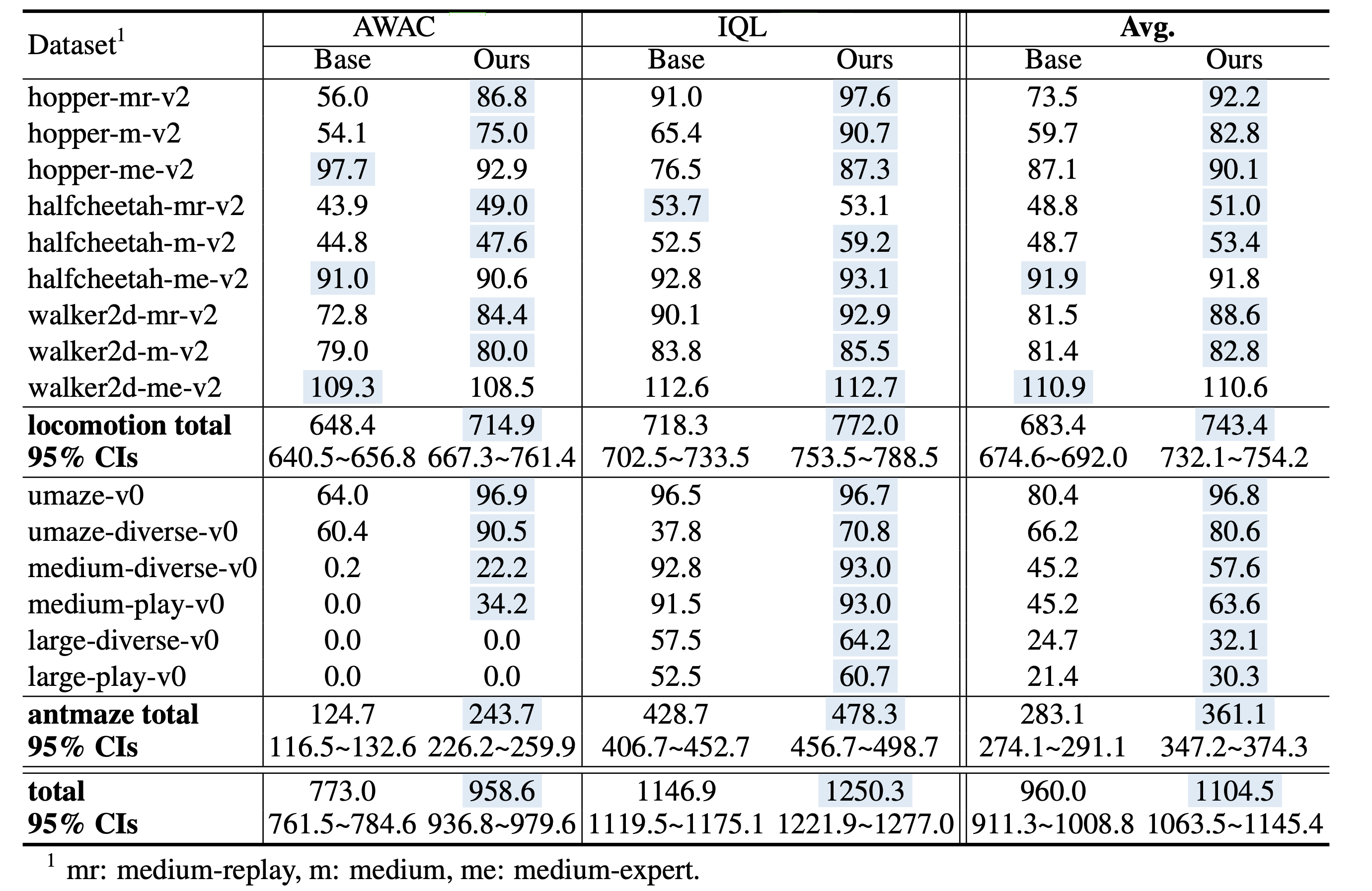

Offline-to-online reinforcement learning (RL) is a training paradigm that combines pre-training on a pre-collected dataset with fine-tuning in an online environment. However, online fine-tuning can intensify the well-known distributional shift problem. Existing solutions tackle this problem by imposing a policy constraint on the policy improvement objective in both offline and online learning. They typically apply a single balance between policy improvement and constraints across diverse data collections. This one-size-fits-all approach may not make the best use of each collected sample, because data quality varies significantly across states. To this end, we introduce Family Offline-to-Online RL (FamO2O), a simple yet effective framework that empowers existing algorithms to determine state-adaptive improvement-constraint balances. FamO2O utilizes a universal model to train a family of policies with different improvement/constraint intensities, and a balance model to select a suitable policy for each state. Theoretically, we prove that state-adaptive balances are necessary for achieving a higher policy performance upper bound. Empirically, extensive experiments show that FamO2O offers a statistically significant improvement over various existing methods, achieving state-of-the-art performance on the D4RL benchmark.
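The interplay between the universal model and the balance model can be illustrated with a minimal sketch. Everything below is a hypothetical toy stand-in written for illustration: the class names `BalanceModel` and `UniversalPolicy`, the linear parameterization, and the assumed coefficient range `[0, 1]` are not taken from the paper, and no training losses are shown. The sketch only demonstrates the inference-time idea that a balance coefficient is chosen per state and then passed to a single policy that is conditioned on it.

```python
import math
import random


class BalanceModel:
    """Toy balance model: maps a state to a coefficient in [low, high] (assumed range)."""

    def __init__(self, state_dim, low=0.0, high=1.0, seed=0):
        rng = random.Random(seed)
        self.w = [rng.uniform(-1.0, 1.0) for _ in range(state_dim)]
        self.low, self.high = low, high

    def __call__(self, state):
        z = sum(w * s for w, s in zip(self.w, state))
        squashed = 1.0 / (1.0 + math.exp(-z))  # sigmoid into (0, 1)
        return self.low + (self.high - self.low) * squashed


class UniversalPolicy:
    """Toy universal model: one set of weights, indexed by the balance coefficient."""

    def __init__(self, state_dim, action_dim, seed=1):
        rng = random.Random(seed)
        # Each action unit sees the state plus the balance coefficient as one extra input.
        self.w = [
            [rng.uniform(-1.0, 1.0) for _ in range(state_dim + 1)]
            for _ in range(action_dim)
        ]

    def __call__(self, state, balance):
        x = list(state) + [balance]
        return [math.tanh(sum(w * v for w, v in zip(row, x))) for row in self.w]


def select_action(policy, balance_model, state):
    """Pick a state-specific balance, then query the matching member of the policy family."""
    balance = balance_model(state)
    return policy(state, balance), balance


if __name__ == "__main__":
    policy = UniversalPolicy(state_dim=3, action_dim=2)
    balance_model = BalanceModel(state_dim=3)
    for state in ([1.0, 0.0, -1.0], [-0.5, 2.0, 0.3]):
        action, balance = select_action(policy, balance_model, state)
        print(state, round(balance, 3), [round(a, 3) for a in action])
```

Conditioning one network on the coefficient, rather than storing a separate network per coefficient, is what lets a single training run yield the whole family; a fixed-balance baseline corresponds to calling `policy(state, c)` with the same constant `c` for every state.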

For a detailed overview of our study, including both qualitative and quantitative results, please refer to our paper.

@misc{wang2023train,
  title={Train Once, Get a Family: State-Adaptive Balances for Offline-to-Online Reinforcement Learning},
  author={Shenzhi Wang and Qisen Yang and Jiawei Gao and Matthieu Gaetan Lin and Hao Chen and Liwei Wu and Ning Jia and Shiji Song and Gao Huang},
  year={2023},
  eprint={2310.17966},
  archivePrefix={arXiv},
  primaryClass={cs.LG}
}